Model behaviour


From Shaastra :: Vol 04 Issue 06 :: Jul 2025

Foundation models in biology are paving the way for a better understanding of diseases. Here's how.

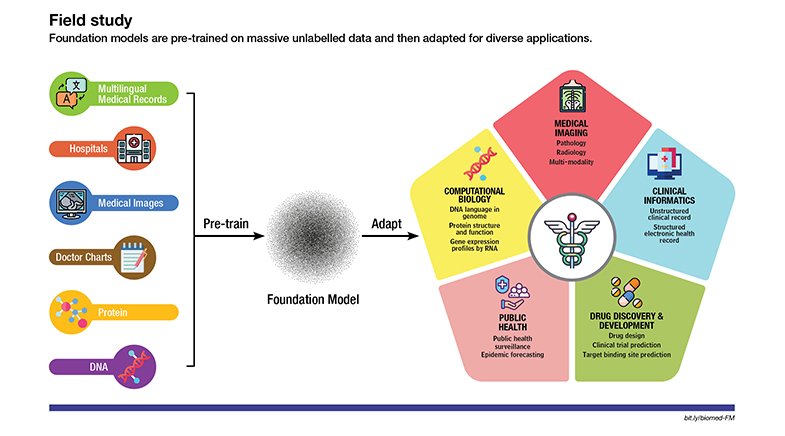

The union of artificial intelligence (AI) and biology is heralding a new era in biological research. At the heart of this alliance are foundation models: a class of AI models built along the same lines as large language models (LLMs) or vision language models (VLMs). Unlike traditional AI models, which are typically built for a single task, foundation models are pre-trained on massive and diverse datasets and can be adapted to many biological tasks.

What does a foundation model do?

Just as the LLMs powering popular AI applications such as ChatGPT predict the next word in a sentence by recognising patterns in the text they were trained on, biology foundation models can predict DNA, RNA, and protein sequences, among other things, based on their training data. They can also be adapted to multiple applications. For example, Evo 2 is a foundation model trained on DNA data, but it also works well with RNA and protein sequences.
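The idea of "predicting the next element by recognising patterns in training data" can be illustrated with a deliberately tiny toy. The sketch below is not how Evo 2 or any real foundation model works: those use deep neural networks trained on billions of bases, whereas this simply counts which base most often follows each three-base context. The function names and training sequences are hypothetical, chosen for illustration only.

```python
from collections import Counter, defaultdict

def train_next_base_model(sequences, k=3):
    """Count which base follows each k-base context in the training sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for i in range(len(seq) - k):
            context = seq[i:i + k]
            counts[context][seq[i + k]] += 1
    return counts

def predict_next_base(counts, sequence, k=3):
    """Predict the base most often seen after the last k bases of `sequence`.

    Returns None if that context never appeared during training.
    """
    followers = counts.get(sequence[-k:])
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy training data; real models train on vastly more sequence.
training_sequences = ["ATGATGATGATG", "ATGATGCCC"]
model = train_next_base_model(training_sequences)
print(predict_next_base(model, "CCATG"))  # → A
```

In the training data, "ATG" is followed by "A" four times and by "C" once, so the toy model predicts "A". A real foundation model learns far richer, longer-range patterns, but the underlying task of predicting what comes next is the same in spirit.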

Training a foundation model is a two-step process. The first step, pre-training, requires a massive amount of data. In this step, the model learns the patterns in the input data and develops an understanding of the structure and syntax of its "language". This could be the language of DNA, RNA, or X-ray images. The second step is fine-tuning, in which the pre-trained model is further trained on smaller, task-specific datasets so that it can be adapted for various use cases. For example, a model pre-trained on a large dataset of medical images can be fine-tuned with a smaller dataset to detect a specific disease, and the same pre-trained model can be fine-tuned separately for several other diseases.
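The two steps can be sketched with a model far simpler than any real foundation model: a straight line with two parameters, trained by gradient descent. This is a minimal illustration under stated assumptions. Both datasets are invented, and pre-training here is supervised, whereas real foundation models are usually pre-trained with self-supervised objectives such as predicting masked or next tokens. What it does show faithfully is the key idea of fine-tuning: training on the small task dataset starts from the pre-trained parameters rather than from scratch.

```python
def train(params, data, lr=0.05, epochs=500):
    """Full-batch gradient descent on mean squared error, starting from `params`."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error of the line y = w * x + b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

# Step 1, "pre-training": a larger, general dataset (hypothetical rule y = 2x).
general_data = [(i / 10, 2 * i / 10) for i in range(-20, 21)]
pretrained = train((0.0, 0.0), general_data)

# Step 2, "fine-tuning": a small, task-specific dataset (hypothetical rule
# y = 2x + 1), starting from the pre-trained parameters instead of zeros.
task_data = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0), (1.5, 4.0)]
fine_tuned = train(pretrained, task_data)

print(pretrained)  # close to (2.0, 0.0)
print(fine_tuned)  # close to (2.0, 1.0)
```

Because fine-tuning begins with the slope already learned during pre-training, it mainly has to learn the offset specific to the new task, which is why four data points are enough here.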

The ability of foundation models to adapt to and integrate diverse datasets is particularly useful in biology research, as it allows for a more holistic view of biological processes. It also opens up opportunities to explore novel connections between diverse datasets, creating new avenues for research. It is no wonder, then, that researchers across domains are developing or using foundation models. A search for the term "foundation model" on PubMed, a free resource provided by the U.S. National Library of Medicine, yielded 33 papers in 2023. The number shot up to 174 in 2024 and had reached 200 by July 2025.
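Readers who want to track this trend themselves can query PubMed programmatically through NCBI's public E-utilities service. The sketch below assumes a search for the exact phrase "foundation model" filtered by publication year; the article does not state its exact query, so the helper names and the choice of date field are assumptions, and live counts may differ from those quoted.

```python
import json
import urllib.parse
import urllib.request

# NCBI E-utilities search endpoint for PubMed and other Entrez databases.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

def build_count_url(term, year):
    """Build an esearch URL that asks only for the number of PubMed hits in one year."""
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",   # filter on publication date
        "mindate": str(year),
        "maxdate": str(year),
        "retmax": "0",        # skip the list of article IDs; only the count is needed
        "retmode": "json",
    }
    return ESEARCH_URL + "?" + urllib.parse.urlencode(params)

def parse_count(response_text):
    """Extract the hit count (returned as a string) from an esearch JSON response."""
    return int(json.loads(response_text)["esearchresult"]["count"])

def count_papers(term, year):
    """Query PubMed over the network and return the number of matching papers."""
    with urllib.request.urlopen(build_count_url(term, year), timeout=30) as response:
        return parse_count(response.read().decode("utf-8"))
```

Calling `count_papers('"foundation model"', 2024)` returns the current count, which may not match the figures in the article because PubMed is continually updated and search settings vary. NCBI asks scripts without an API key to make no more than three requests per second.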

Where are the models being used?

These models have already begun to influence outcomes in biology labs and clinics. For example, Google DeepMind's AlphaFold2 has been used to predict the structures of millions of proteins, saving laboratories time and effort. Its impact was so significant that its developers, Demis Hassabis and John Jumper, shared the 2024 Nobel Prize in Chemistry. Likewise, Geneformer, a foundation model that learns how genes interact, helped identify genes that could be targeted in cardiomyopathy (a heart muscle disease) to restore normal function.

Who is developing them?

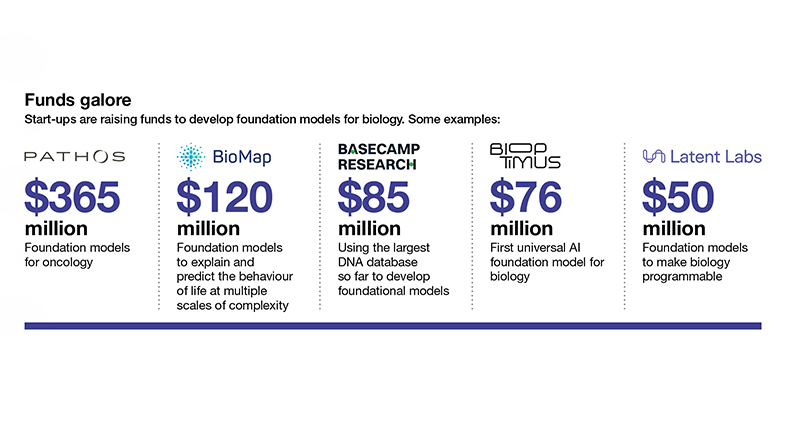

The term "foundation model" was coined in August 2021 by researchers at Stanford University's Center for Research on Foundation Models (CRFM). Since then, many academic institutions and companies have developed their own models, including large tech companies such as Google, Meta, IBM, and NVIDIA. Start-ups, too, have raised funds to develop foundation models for biology (see graphic: 'Funds galore').

What are the challenges?

Despite the rapidly growing interest in foundation models and their potential, they are not without challenges. Their success depends on three critical factors: large datasets, powerful computing, and generative AI algorithms. Access to such datasets is not easy and is sometimes restricted because of privacy and security concerns. The cost of powerful computing is another barrier. Not surprisingly, much of this development is concentrated in Western nations, which have greater access to such computing resources. There are also ethical concerns about over-reliance on AI and questions about whether it will diminish the role of researchers.


But AI and the opportunities it creates for biology research cannot be ignored. According to a June 2025 article in PLOS Biology (bit.ly/Foundation-Models), some ideas about the way forward come from successful foundation models, such as AlphaFold and RETFound (a model for retinal images), that are open-source and have made their data freely available. "The next scientific revolution will come from teams who can judiciously steer AI, knowing when to trust it, when to adjust its course, and when to drive it into uncharted territory," the article says.
