User discretion is advised

- from Shaastra :: vol 04 issue 11 :: Dec 2025

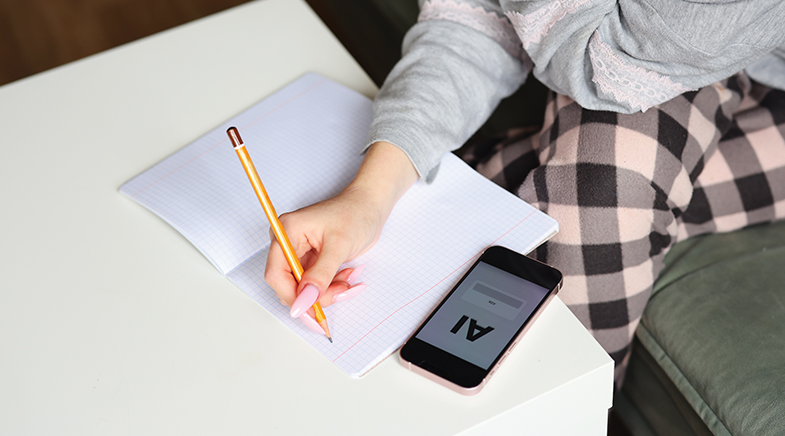

For better learning, treat AI as a sparring partner and not a substitute for thought, experts suggest.

The schoolteachers and principals Yizhou Fan met in 2023 all had one question for the Beijing-based Assistant Professor. Would they lose their jobs because of the growing use of artificial intelligence (AI)? OpenAI had just released the AI platform GPT-4, and the educators were concerned. Could students learn with just AI, many asked Fan, who taught at the Graduate School of Education, Peking University.

To address the query, Fan started a randomised experimental lab study. He sought to measure learners' motivation and performance on a writing task in two scenarios: when they relied on ChatGPT (OpenAI's advanced AI chatbot), and when they worked with human experts.

Fan and his colleagues found that using ChatGPT improves learners' performance in the short term but makes them more reliant on AI. The practice promoted metacognitive laziness: a tendency to avoid the cognitive effort and self-regulation required for deep learning. The learner group working with human experts or teachers undertook activities that deepened their engagement with the task — for instance, making plans, understanding the work, evaluating their writing, and monitoring the learning journey.

The AI group, on the other hand, would ask AI a question and work for a while; they repeated the process until the task was complete. "They ended up writing a beautiful essay within an hour, but when the AI scaffolding is gone, they can't maintain their performance," says Fan. Teachers may not care much about short-term performance, but they do care whether the learner really learns to write an essay, he explains. "The learning journey is slow, right? AI can quickly help improve your performance, but the human teacher will trigger a deeper-level reflection on your learning," he adds.

Studies, such as the work conducted by Fan (bit.ly/AI-laziness), have generated a growing body of evidence on the negative impact of indiscriminate AI use on the learning journey of school and college students. Researchers from the Massachusetts Institute of Technology, in a 2025 study (bit.ly/MIT-learning), used EEG (electroencephalography) to assess learners' cognitive engagement during an essay-writing task. Learners were divided into three groups: ChatGPT users, search engine users, and the 'brain-only' group. The 'brain-only' users displayed the highest cognitive engagement, and the ChatGPT users showed the least.

Over the course of the four-month study, the ChatGPT users performed worse than their counterparts in the 'brain-only' group across all levels: neural, linguistic and scoring. "The LLM [ChatGPT] undeniably reduced the friction involved in answering participants' questions compared to the search engine. However, this convenience came at a cognitive cost, diminishing users' inclination to critically evaluate the LLM's output or 'opinions'," observe the researchers. Another 2025 study (bit.ly/AI-criticalthinking) by the SBS Swiss Business School found a significant negative correlation between frequent use of AI tools and critical thinking abilities. The decline in critical thinking abilities in frequent AI users, the study notes, was mediated by cognitive offloading — using AI tools to reduce the mental effort required to complete a task.

OUTSOURCING THOUGHT

Cognitive offloading is not a new concept: web search, calculator, and even notes are external aids that free up cognitive resources for complex tasks. AI is merely the latest entrant. Past studies have analysed the impact of tools on cognitive processes. A 2011 study (bit.ly/Google-Memory) in Science on the cognitive consequences of Google/web search found that when people knew they could access important information on the web, they were less likely to recall the information but more likely to remember where to access it. "The internet has become a primary form of external or transactive memory, where information is stored collectively outside ourselves," says the study.

Over the last couple of decades, digital tools have become increasingly more powerful and pervasive. These tools have reduced the need to hold information in your mind. Why remember when you can look it up?

The outsourcing, however, comes at a cost. Research shows that cognitive offloading is associated with a decline in critical thinking abilities, and even intelligence. The Flynn effect is the sustained rise in average IQ scores across the globe, documented by James Flynn in 1984. Since the early 2000s, however, many countries have recorded a reverse Flynn effect: a slowdown, plateau or decline in the average IQ. A 2023 study (bit.ly/Reverse-Flynn) in Intelligence, for instance, showed a reverse Flynn effect from 2006 to 2018 in a test sample of nearly 400,000 Americans. The effect was most pronounced among those aged 18-22 and those with lower levels of education. This was unprecedented.

The reported decline is behavioural rather than genetic, notes Michael Gerlich, Professor of Management and Head, Centre for Strategic Corporate Foresight and Sustainability at the SBS Swiss Business School. "My findings indicate a growing pattern of digital dependence — people outsource reasoning to AI or digital systems, and gradually invest less cognitive effort. Over time, this can resemble a reverse Flynn effect: a societal decline in active reasoning and independent thought. The issue is not intelligence itself, but mental engagement. When thinking becomes optional, reflection declines," he says.

BUILDING BLOCKS

Cognitive offloading derails learning and thinking abilities. To understand how that happens, it is essential to understand how the brain learns new ideas and stores them. Students rely on a dual memory system in the brain when they learn something new: declarative memory to recall facts, and procedural memory for skills that become second nature. Effective learning happens when knowledge moves from the declarative to the procedural system, and that movement depends entirely on practising a skill or a fact in multiple ways so that it is embedded in the neural circuits.

New knowledge and learning are built on the foundations of existing knowledge. Repeated recall, practice and linking new ideas with familiar concepts develop neural circuits for new knowledge. Constantly looking up things instead of internalising them leads to a poorly developed neural circuitry, and limits "deep understanding and cross-domain thinking", says a 2025 study (bit.ly/Limit-Thinking). "Over time, it makes it hard to recognise patterns and connect new information to existing information in our mind," it observes.

Barbara Oakley, a co-author of the study and Distinguished Professor of Engineering at Oakland University, points out that when people try to creatively learn something, they must have some "base knowledge" in them. "And, if you don't have that in your head, if you've got it offloaded somewhere else, you can't begin to see what those connections [to pre-existing information in the brain] are," says Oakley, who also teaches 'Learning How to Learn', an immensely popular massive open online course (MOOC). "If I were a poet and somebody came to me and said, 'Here is a great physics theorem', with no conceptual understanding, none of the neural connections about physics pre-existing in my brain, I couldn't understand what this marvellous new idea was," she explains.

Learners struggle to develop a complex or nuanced understanding without a base of internalised knowledge. As Oakley and her colleagues observe in the study, "In an age saturated with external information, genuine insight still depends on robust internal knowledge."

AI AS A COLLEAGUE

Does that mean learners should not use AI at all? The answer is no; it has to be used — but in a way that enhances, or at least maintains, cognitive engagement. In an October 2025 study, Gerlich discusses structured prompting — an intentional approach to using AI that keeps the human in the loop. "[In structured prompting], instead of asking AI to 'write for me', users follow a reflective process: first form their own ideas, then use AI for targeted information, challenge the response with counter-questions, and finally revise independently. It transforms AI from a substitute into a sparring partner. My data show that this approach reduces cognitive offloading and increases reflective engagement. In short, we safeguard our mental abilities not by avoiding AI, but by using it deliberately and reflectively," he says.

Gerlich uses AI in his day-to-day work, but with the same discipline he advocates in his research. "If AI simplifies a task without deepening understanding, I stop; if it challenges my thinking, I continue," he observes.

Every researcher interviewed for this report told Shaastra they used AI in their everyday work, but with discretion. "I'm often asking it to burnish the edges of what I am already doing and what I already know," says Oakley. "I love AI, because it is like having a colleague at hand who has a different set of expertise. Would I trust even my most experienced colleague to be an absolute expert on whatever I'm writing about? Not necessarily, but I certainly get a lot of help from my colleagues," she adds.

Young learners with limited domain knowledge may lack that discernment and use AI in ways that do not support cognitive growth. "Younger participants tended to use AI for fast task completion, summarising, rewriting, or generating text, often without questioning its content. Older or more educated participants, on the other hand, used AI more selectively, mainly to cross-check or refine their own reasoning," Gerlich notes in his study. "Yet when younger users received a short training in structured prompting, their reasoning quality improved dramatically."

DEEPER OVER FASTER

Classrooms, learning environments, and testing set-ups need to be redesigned to integrate AI in ways that foster cognitive growth. "If you're trying to ensure that a college student is learning how to write well, you better be testing that student when they are not able to access ChatGPT," says Oakley. The approach ensures they learn to write well, but also know what to look for in good writing when they use ChatGPT, she explains.

Fan is in favour of teaching students metacognition skills (the ability to reflect on, monitor, and evaluate learning goals and outcomes) in addition to the subject. It is not enough to write a good essay; students must also "demonstrate the thinking behind writing a good essay", he says.

Closer home, at the Indian Institute of Technology Kanpur, Anveshna Srivastava, Assistant Professor in the Department of Cognitive Science, too, advocates weaving metacognitive strategies into pedagogy to help students reflect, evaluate, and develop a sense of ownership over learning. "I generally use counterfactual ('what if') reasoning to probe students' current understanding and encourage them to engage with different possibilities of the current conceptual scenario," she says. Such practices reduce cognitive debt and help students engage meaningfully with the subject, she explains, adding that classrooms must prioritise deeper learning over quick performance.

The schoolteachers Fan met in 2023 are adapting to the ubiquity of AI. They have moved on from shock and worry to a more structured understanding of their role and that of AI in classrooms, and of co-existing with AI while continuing to matter in the learning journey of students.
